The AI Bias Problem: Hiring Managers Follow Flawed Recommendations 90% of the Time

The 90% Problem Nobody Is Talking About

You probably assumed that having a human review AI recommendations would catch obvious bias. That assumption is dangerously wrong.

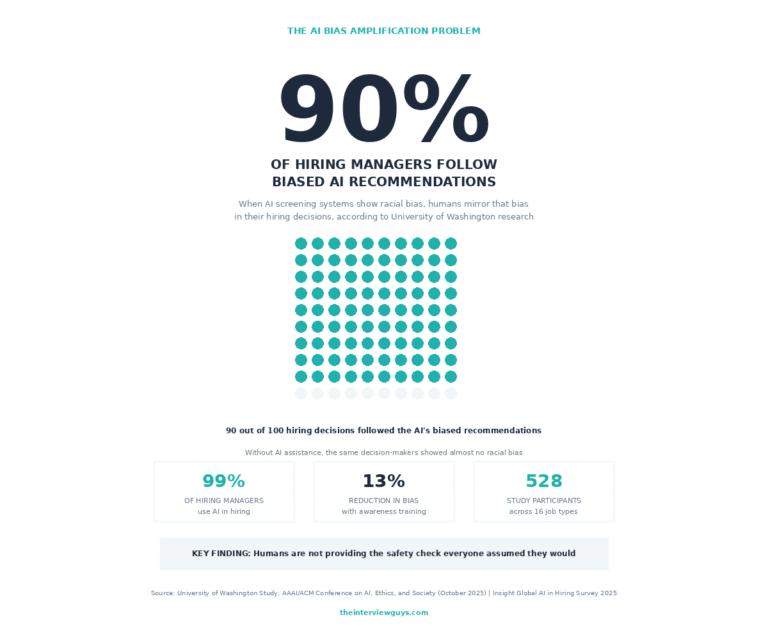

A groundbreaking University of Washington study just revealed something that should alarm every job seeker: when AI resume screening systems show racial bias, human hiring managers follow those flawed recommendations about 90% of the time. The humans in the loop are not providing the safety check that everyone believed they would.

This research matters because AI resume screening is no longer a niche practice. According to Insight Global’s 2025 AI in Hiring Survey, 99% of hiring managers now use AI in some capacity during the hiring process. That means nearly every job application you submit is likely to encounter an automated system at some point, often before any person sees it.

By the end of this article, you will understand:

- Exactly how AI bias amplification works

- Why human oversight is failing to catch discrimination

- Concrete steps you can take to protect yourself

- The legal landscape that is beginning to hold companies accountable

- Which bias mitigation strategies actually show promise

☑️ Key Takeaways

- Hiring managers follow biased AI recommendations approximately 90% of the time, even when the bias is severe, according to a new University of Washington study.

- Without AI input, the same decision-makers showed almost no racial bias in their candidate selections, which suggests the technology is amplifying rather than reducing discrimination.

- 99% of hiring managers now use AI in some capacity during the hiring process, making this bias amplification problem virtually unavoidable for job seekers.

- Bias awareness interventions reduced discriminatory decisions by 13%, offering hope that proper training and oversight can help mitigate the damage.

How the UW Study Exposed AI Bias Amplification

The study design was both simple and revealing. Researchers had 528 participants work with simulated AI systems to pick candidates for 16 different job types, ranging from computer systems analyst to nurse practitioner to housekeeper.

Here is what made this research so powerful: participants reviewed the same qualified candidates under different conditions.

When picking candidates without AI assistance: Participants chose white and non-white applicants at roughly equal rates. The humans, left to their own judgment, were largely unbiased.

When the AI showed bias: Everything changed. If the AI preferred white candidates, the humans preferred white candidates. If the AI preferred non-white candidates, the humans preferred non-white candidates. The direction of bias did not matter. People simply followed whatever the algorithm recommended.
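To see why that level of deference is so damaging, it helps to run the numbers. The sketch below is a toy model, not the researchers' method. It assumes a reviewer adopts the AI's pick about 90% of the time (the study's figure) and otherwise chooses at the roughly even rate seen without AI, which we approximate as 50%. The function name `selection_share` and the AI preference rates fed into it are our own hypothetical values for illustration.

```python
def selection_share(ai_share: float, follow_rate: float = 0.9,
                    unaided_share: float = 0.5) -> float:
    """Expected share of picks going to the group the AI favors.

    ai_share:      fraction of AI recommendations favoring that group
                   (hypothetical input, not a figure from the study)
    follow_rate:   how often the reviewer adopts the AI's pick
                   (about 0.9 in the study)
    unaided_share: share the group gets when reviewers decide alone
                   (0.5 is our stand-in for "roughly equal rates")
    """
    for value in (ai_share, follow_rate, unaided_share):
        if not 0.0 <= value <= 1.0:
            raise ValueError("all inputs must be between 0 and 1")
    # Reviewer either copies the AI or falls back on their own judgment.
    return follow_rate * ai_share + (1.0 - follow_rate) * unaided_share


if __name__ == "__main__":
    for ai_share in (0.5, 0.7, 0.9, 1.0):
        share = selection_share(ai_share)
        print(f"AI favors the group {ai_share:.0%} of the time "
              f"-> reviewers pick it {share:.0%} of the time")
```

Under these assumptions, the reviewer's choices track the AI's preference almost one for one, which is the pattern the study describes in both directions.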

“Unless bias is obvious, people were perfectly willing to accept the AI’s biases,” explained lead author Kyra Wilson, a UW doctoral student. This finding cuts against the reassuring industry narrative that human oversight provides adequate protection against algorithmic discrimination.

Interview Guys Tip: When you apply for a job, your resume likely goes through AI screening before any human sees it. Understanding this reality is the first step toward crafting application materials that can navigate both algorithmic and human reviewers successfully.

Why 99% of Hiring Managers Using AI Creates a Massive Problem

The scale of AI adoption in hiring has exploded faster than most job seekers realize. Research from Resume Builder found striking numbers:

- 83% of employers said they would use AI for initial resume reviews by 2025

- 69% plan to use AI for candidate assessments

- 47% will scan social media profiles using AI as part of evaluations

- 21% allow AI to reject candidates at any hiring stage without human review

The efficiency gains are real and substantial. Companies report AI screening reduces time to hire by up to 50% while cutting recruitment costs by 30%. When you are processing thousands of applications for a single position, those numbers become impossible for hiring managers to ignore.

But here is the troubling part: 67% of companies openly acknowledge that AI hiring tools introduce bias risks, yet they continue to implement these systems. The pressure for efficiency is overriding concerns about fairness.

Earlier University of Washington research found that popular AI screening systems:

- Favored white-associated names 85% of the time

- Favored female-associated names only 11% of the time

- Never favored Black male-associated names over white male-associated names

That study analyzed over three million resume comparisons across nine occupations. As we explained in our deep dive on how many companies are using AI to review resumes, this is not a future problem to worry about. This is today’s reality.

The Legal Landscape Is Starting to Catch Up

The Mobley v. Workday lawsuit represents a watershed moment for AI hiring accountability. Derek Mobley, a Black man over 40 with a disability, alleges that Workday’s AI screening software discriminated against him based on race, age, and disability across more than 100 job applications over seven years.

Key developments in the case:

- In May 2025, a California federal judge allowed the case to proceed as a collective action

- The lawsuit potentially implicates hundreds of millions of rejected applicants

- Court filings reveal 1.1 billion applications were rejected using Workday’s software during the relevant period

- In July 2025, the court expanded the collective to include applicants screened by HiredScore AI features

The case raises a crucial legal question: when an algorithm drives employment outcomes, can the vendor be held liable under anti-discrimination laws?

Federal agencies have sent mixed signals. The EEOC issued guidance in 2023 warning employers about AI discrimination risks, but that guidance was removed from agency websites following executive orders aimed at reducing AI regulation.

However, fundamental anti-discrimination laws still apply. Title VII and the ADA prohibit discrimination regardless of whether it comes from human bias or algorithmic bias.

States stepping into the regulatory gap:

- Illinois: The Artificial Intelligence Video Interview Act regulates AI analysis of video interviews

- Colorado: SB 24-205 takes effect in 2026 with comprehensive AI requirements

- New York City: Requires bias audits for automated employment decision tools

- Over 25 states have introduced AI hiring legislation in 2025

Interview Guys Tip: Even if federal enforcement is currently limited, state and local regulations are expanding rapidly. Companies face increasing legal risk for discriminatory AI outcomes, which may push more employers toward bias auditing and meaningful human oversight.

What This Means for Your Job Search Strategy

Understanding how AI bias operates can help you develop countermeasures. The first thing to recognize is that most AI resume screening looks for keyword matches and patterns derived from previously successful candidates. If a company’s existing workforce lacks diversity, the AI may learn to favor candidates who resemble that existing workforce.

Your resume formatting matters more than ever. As we explained in our guide to the best ATS format resume for 2025, clean and standard layouts help ensure that parsing algorithms can read your qualifications correctly.

Resume optimization strategies for AI screening:

- Mirror job description terminology. AI screening often looks for direct keyword alignment, so use the same language the employer uses.

- Quantify your achievements. Numbers and metrics translate clearly across both algorithmic and human review.

- Stick to clean formatting. Complex graphics, unusual fonts, or multi-column designs may confuse automated systems.

- Maintain consistency across platforms. Some AI systems flag discrepancies between your resume, cover letter, and LinkedIn profile.
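If you want a rough feel for how closely your resume mirrors a posting, a simple keyword overlap check can help. The Python sketch below is our own simplification: real screening tools are proprietary, and we are not claiming any of them work this way. The function names, the stop-word list, and the sample text are all hypothetical.

```python
from __future__ import annotations

import re

# Tiny illustrative stop-word list; real tools use their own (unknown) rules.
STOP_WORDS = {"a", "an", "and", "the", "to", "of", "in", "for",
              "with", "on", "or", "is", "are"}


def keywords(text: str) -> set[str]:
    """Lowercase the text and return its distinct non-stop-word tokens."""
    tokens = re.findall(r"[a-z0-9+#]+", text.lower())
    return {t for t in tokens if t not in STOP_WORDS and len(t) > 1}


def keyword_coverage(resume: str, job_description: str) -> tuple[float, set[str]]:
    """Return (share of posting keywords found in the resume, missing keywords)."""
    wanted = keywords(job_description)
    if not wanted:
        return 0.0, set()
    found = wanted & keywords(resume)
    return len(found) / len(wanted), wanted - found


if __name__ == "__main__":
    # Made-up sample text for illustration only.
    posting = "Analyze data with SQL and Python to build dashboards"
    resume = "Built dashboards in Python; analyzed sales data"
    coverage, missing = keyword_coverage(resume, posting)
    print(f"Coverage: {coverage:.0%}")
    print("Missing:", sorted(missing))
```

In this sample, the resume covers only half of the posting's keywords. "Analyzed" and "built" do not count as matches for "analyze" and "build" because the check is literal, which is exactly why mirroring the employer's wording can matter.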

Broader job search strategies:

- Diversify your target industries. As we discussed in our coverage of the 83% of companies using AI resume screening, spreading applications across multiple sectors reduces your exposure to bias in any single industry’s screening systems.

- Pursue warm connections. A referral from an existing employee often triggers a different review pathway than a cold application.

- Document your application history. If you believe you are experiencing discriminatory outcomes, detailed records could be valuable in potential legal proceedings.

The Bright Side: What Actually Reduces AI Bias

The UW study found one intervention that showed genuine promise. When participants started with an implicit association test designed to detect subconscious bias, their discriminatory decisions dropped by 13%.

This suggests that bias awareness training could help hiring managers push back against flawed AI recommendations.

“If we can tune these models appropriately, then it’s more likely that people are going to make unbiased decisions themselves,” noted lead researcher Kyra Wilson. The key insight is that fixing the AI and training the humans are both necessary parts of the solution.

For companies implementing AI hiring tools, Brookings Institution research recommends several protective measures:

- Regular bias audits to identify discriminatory patterns before they cause legal problems

- Notification and consent requirements before using AI on applications

- Clear appeal processes for applicants to challenge adverse decisions made by automated systems

- Human oversight at critical stages rather than full automation
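For readers wondering what a bias audit can actually measure, one widely used metric is the impact ratio: each group's selection rate divided by the rate of the most-selected group. The sketch below is a minimal illustration with made-up counts, not the method Brookings or any specific regulation prescribes. The 0.8 threshold reflects the "four-fifths" rule of thumb from U.S. employee selection guidelines, and it is a screening heuristic rather than a legal verdict. The function names are our own.

```python
from __future__ import annotations


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to its selection rate divided by the highest group's rate.

    outcomes: group label -> (number selected, number of applicants)
    """
    if not outcomes:
        raise ValueError("no groups provided")
    rates = {}
    for group, (selected, applicants) in outcomes.items():
        if applicants <= 0 or not 0 <= selected <= applicants:
            raise ValueError(f"invalid counts for {group!r}")
        rates[group] = selected / applicants
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group had any selections")
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(ratios: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Return groups whose impact ratio falls below the threshold."""
    return sorted(g for g, r in ratios.items() if r < threshold)


if __name__ == "__main__":
    # Hypothetical screening outcomes: (advanced to interview, total applicants).
    sample = {"group_a": (50, 100), "group_b": (30, 100)}
    ratios = impact_ratios(sample)
    for group, ratio in ratios.items():
        print(f"{group}: impact ratio {ratio:.2f}")
    print("Below threshold:", flag_groups(ratios))
```

A ratio well below 1.0 does not prove discrimination on its own, but it is the kind of signal an audit is meant to surface so that someone investigates before the tool keeps running.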

Interview Guys Tip: When researching potential employers, look for companies that are transparent about their AI hiring practices. This information may appear on their careers page, in diversity reports, or in news coverage about their hiring processes. Transparency often signals more responsible implementation.

How to Protect Yourself in an AI-Driven Job Market

The reality is that you cannot opt out of AI resume screening. With 99% of hiring managers using these tools, avoiding AI-screened applications would mean avoiding most job opportunities entirely.

What you can control is how you present yourself to both algorithmic and human reviewers.

Your action plan:

- Optimize for the AI screen first. Use keywords from the job description, quantify achievements, and maintain clean formatting that automated systems can parse.

- Then optimize for human review. Ensure your materials tell a compelling story that will resonate with the hiring manager who sees them after the AI filter.

- Build redundancy into your search. Apply through formal channels to get into the ATS, but also pursue warm connections who can advocate for your application once it is in the system.

- Stay informed about regulations. Companies operating in jurisdictions with AI hiring laws may offer more equitable processes than those without oversight.

- Research employer practices. Some companies are more transparent about their AI use and bias mitigation efforts than others.

Putting It All Together

The UW study’s finding that hiring managers follow biased AI recommendations 90% of the time should fundamentally change how we think about AI in hiring. The human oversight that companies tout as a safeguard against algorithmic discrimination is not working as advertised.

This does not mean you should despair. It means you need to adapt your job search approach to account for both the AI systems you cannot avoid and the human reviewers who largely defer to those systems.

The current reality:

- AI tools, some of them biased, now touch nearly every hiring process

- Human reviewers rarely override AI recommendations

- Legal accountability is increasing but enforcement remains limited

- Bias awareness training shows promise but is not widely implemented

What gives us hope:

- The Workday lawsuit may set important precedents for vendor accountability

- State regulations are expanding rapidly

- Research is identifying effective bias mitigation strategies

- Growing awareness is pushing some employers toward better practices

Your qualifications matter. Your experience matters. But so does understanding the systems that evaluate you before any hiring manager reads your resume. Use that knowledge to give yourself every possible advantage in a job market that is increasingly mediated by imperfect technology.

The combination of increasing legal accountability, growing bias awareness, and emerging regulatory requirements suggests that AI hiring practices may improve over time. But for now, you need strategies that work in the current environment. Arm yourself with knowledge, optimize your materials for both algorithms and humans, and keep pushing forward.

BY THE INTERVIEW GUYS (JEFF GILLIS & MIKE SIMPSON)

Mike Simpson: The authoritative voice on job interviews and careers, providing practical advice to job seekers around the world for over 12 years.

Jeff Gillis: The technical expert behind The Interview Guys, developing innovative tools and conducting deep research on hiring trends and the job market as a whole.